Apache Kafka Tutorial: Getting Kafka Up and Running

Linear computer science theorists will agree with the line of thinking that as more data streams around the world, the difficulty in accessing resources on the internet will increase likewise. However, with platforms like Apache Kafka, the opposite is true. So much so that high-volume data networks of sizes previously considered impossible can perform with latency as low as between 3 ms and 2 ms.

Such upsides call for wider knowledge determination efforts through bootcamps, webinars, and tutorials, much like this one. This Apache Kafka tutorial walks you through the process of setting up your first live Kafka instance. Thereafter, knowing what’s possible, combined with APIs available to developers by default, it should be clear how disrupting Apache Kafka is in any industry it gets deployed in.

Before we get into the technical aspect of running Apache Kafka, let’s look at what makes it so attractive as a futuristic data storage and streaming platform.

Key Features of Apache Kafka

We used to think about the next age of databases as one giant central repository of data. On the other hand, Apache Kafka proposes a decentralized approach as the next stage in the evolution of data storage. This sort of architecture immediately imbues the objects stored with three key features:

- Fault tolerance

- High scalability

- Near-perfect availability

Firstly, by being decentralized, there’s no single storage location failure that can bring the data network to a screeching halt. Hence the very high resilience is due to tolerance of failure. The smaller resulting storage silos can then be deployed across wider networks than a single big alternative. This makes whatever systems are being carried by Kafka much more scalable.

In real life, companies using Apache Kafka display high-speed data access of huge data objects. Think of how huge movie video files can be accessed simultaneously and across the entire planet, as is the case with Netflix.

Getting Started With Apache Kafka

To get started with a live Kafka instance, you’ll need an environment that’s ready to run the latest versions of Java programs. (At the time of writing this Apache Kafka tutorial, this would be Java 8.) At the same time, you need to have Zookeeper installed as a management protocol for enclosed clusters and topics.

For the latest Kafka platform, use this resource landing page. After successfully installing Apache Kafka, run Zookeeper and initiate a fresh instance of Kafka with the following commands one after the other:

$ bin/zookeeper-server-start.sh config/zookeeper.properties

$ bin/kafka-server-start.sh config/server.properties

How well you implement Apache Kafka depends on how much you take advantage of its structure and methods. Let’s explore these in detail.

Understanding Apache Kafka’s Structure

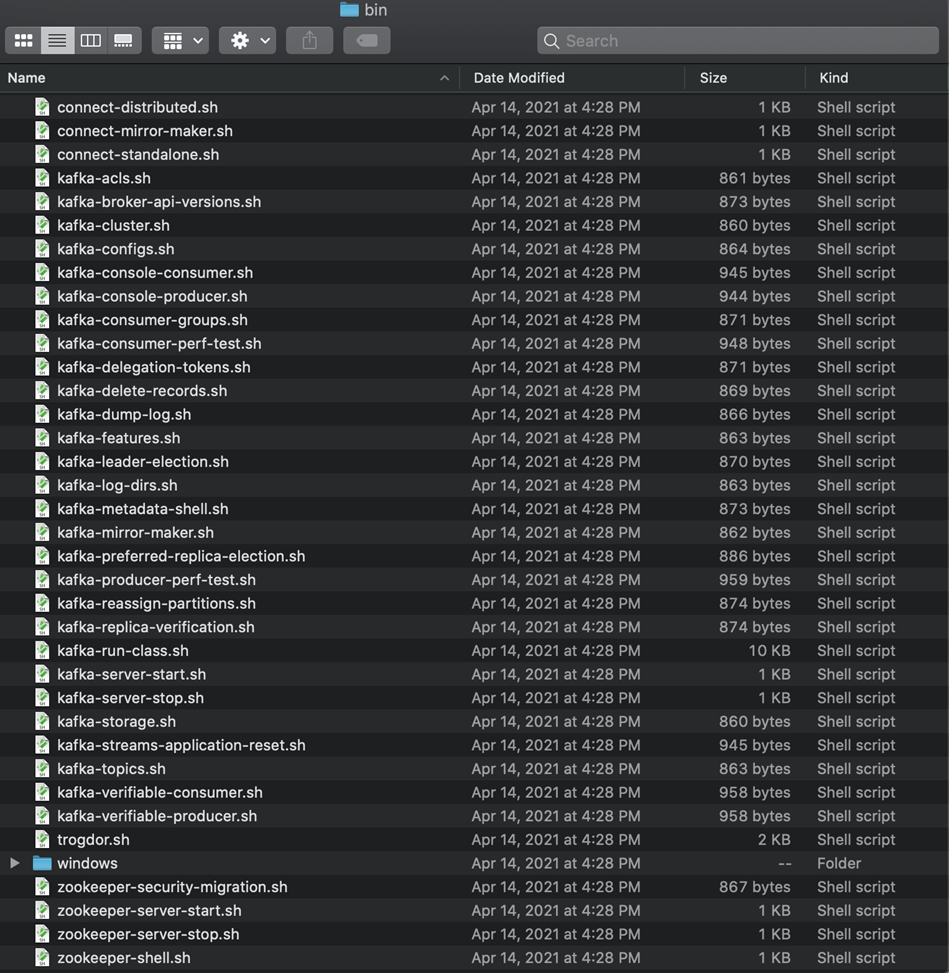

Once you download Kafka onto your admin workstation, the ./bin folder is where all features reside.

Apache Kafka ./bin directory

The first Kafka element we’ll zoom in on is the Topic. Think of a topic as the actual location in which data is stored. Within Kafka, unlike conventional database management systems, said data is referred to as events or messages. So instead of simply recording what your code manipulates, Apache Kafka tracks what happens to the objects.

At any given time, several topics take turns managing the events they share. This could be within a single region or even a wide exposure (across zones). This essentially creates a network of decentralized storage locations. Apache provides a handy Broker system to handle requests and navigation within topic clusters. The broker runs before the assignment of any topics or events in Kafka.

To create new events developers have to call the Producer element in Kafka. If you look closely at the bin folder contents in the screenshot above, you can see that the Consumer function would be your route to reading events stored within topics of interest. At this point, we have the first two blocks of a CRUD application down to a matter of fact.

Integrating Kafka Into Existing Systems

Say you have a dynamic silo of relational data stored somewhere, and you know that adding Kafka into the mix would push performance up. What then? For this instance, you can use the Connect function as a pipe to ingest and push events out between the two. Depending on your configurations of the Connect API, you can set up a persistent streaming pathway of data.

There wouldn’t be any point connecting tables to topics without some value addition through data processing. Just as the JOIN query helps construct new views in SQL databases, you can leverage the Kafka Streams library with astounding outcomes. This is the opportunity to harvest all the characteristics and benefits of Kafka in previously simple applications. Most developers will enjoy how the streams library contains enough examples to get apps up and running in no time, until such a time that they want to terminate an instance of Kafka altogether.

To shut down Kafka, one must turn each service in the order in which they launch. Following the plot herein, this would require closing any client services to which data is being relayed. If yours is an omnidirectional stream, the ingest pipe should be sealed. Then you close the broker process, and lastly the zookeeper. You can quickly close each stage by running Ctrl-C in the terminal.

Apache Kafka Use Cases

Knowing the basics, and having experienced a running Apache Kafka instance, here’s some inspiration in the form of case studies. Consider what’s possible and start creating new streams with Kafka.

Multimedia Streaming: Netflix

There’s no doubt that Netflix has so far made an indelible mark in the entertainment industry. Maintaining such a large reservoir of video files is no small feat—let alone being able to stream high-quality media while keeping some zone-based limits in real time. All of this requires huge bandwidth only possible with Apache Kafka.

Financial Services: Bank of America

Banks require very secure, yet always available, access to events around numerical data and user profiles. Using Kafka, banks such as Bank of America can optimize the availability of customer data across any point of access across the world with very high throughputs.

Handling Big Data: Trivago

With a worldwide network of hotels and other nodes associated with travel convenience, Trivago leverages Apache Kafka to give developers the access and libraries necessary to analyze copious amounts of data quickly whenever a traveler needs flight and hotel reservation information.

How to Get Your Team on the Kafka Route

The easiest way to get your team on the path to making Kafka enhanced applications is by trying it out on pilot projects. However, this could waste a lot of valuable time otherwise spent building legacy systems. To avoid technical debt, the best option for companies looking to jump onto Kafka is signing teams up for Apache Kafka Courses.

Not only would your team learn about Apache Kafka at the same time, but you benefit from the consultation of specialized experts on a one-to-one basis. A course or bootcamp, privately scheduled for convenience around your availability, is the best route of action.

The other alternative would be to announce the move to Kafka and pray that your developers find the time to learn enough such that you can start running the platform in production. But in this case, you’ll be exposing your code to too much experimentation.