Privacy, Profit, and Protection: Why Your Business May Not Survive Without a Private ChatGPT Clone

I don’t need to tell you that Generative AI (GenAI) systems built on Large Language Models (LLMs), like OpenAI’s ChatGPT running GPT-4, are exploding across every aspect of modern business. These models are showing immense potential in a wide range of fields, and for good reason: they represent one of the biggest sea changes in tech history.

With the meteoric rise in popularity of public LLM products, a critical question arises: Should organizations work on creating private LLM systems customized with their own internal data?

The unequivocal answer is yes.

It’s not a simple undertaking. Most organizations will need help leveraging the technology effectively. But the rewards can be huge: from cost savings to faster value delivery to enhanced customer satisfaction. So, by all means, get the help you need and start building your private GenAI app today.

Here’s why.

Why venture into private, customized LLMs?

Public LLMs like ChatGPT have brought the diverse benefits of GenAI to the forefront. However, they also raise significant privacy and security concerns. One of the biggest is what happens to the data users type in. Depending on the provider’s data-use policy, prompts and conversations may be retained, reviewed by the provider, or used to train future versions of the model, so the data you feed them can end up outside your control. Exposure isn’t a corner case with public LLMs; it’s a structural risk of sending your data to someone else’s system. That makes them a breeding ground for privacy issues, especially when sensitive or proprietary information is involved.

Potential issues with using public LLMs

- Prompt Injection Vulnerability: LLM applications are susceptible to a type of attack known as prompt injection, in which crafted input overrides the instructions the system was given. Against a public LLM, a successful attack could expose sensitive information included in prompts or context, and anything the provider retains may later be used to retrain its models.

- Privacy Preservation Gap: The soaring adoption of LLM applications has revealed a glaring gap in preserving the privacy of data processed by these models.

- Data Leakage Risk: Public LLMs can inadvertently memorize sensitive information from their training data and reproduce it in later responses.

- Data Security Principles: The principle of ‘least privilege’ (giving each user or system only the access it actually needs) is hard to enforce when data is sent to a shared external model that you don’t control.

- Boundary Limitations: Public LLMs often lack clearly defined data boundaries, whereas private LLMs operate within boundaries the organization sets and controls.

The compelling benefits

Private LLMs offer a banquet of benefits that are too enticing to overlook:

- Privacy Preservation: Sending data to a centralized LLM provider can be a gamble with privacy. In 2023, for example, Samsung employees reportedly leaked confidential source code and internal meeting notes by pasting them into ChatGPT. A private LLM, on the other hand, keeps your data in-house, significantly reducing such risks.

- Intellectual Property (IP) Retention: The problems and datasets that can be well-addressed by AI tend to be sensitive and proprietary. By deploying in-house models, organizations can keep their valuable IP under wraps while harnessing the power of AI.

- Cost Efficiency: Training an LLM from scratch, or trying to build on free, unsupported tools, can be costly, especially when relying on cloud resources. A private model with enterprise-grade commercial support, however, can open the door to cost-efficient fine-tuning and retraining that fit the organization’s specific needs without breaking the bank.

Thriving examples in the industry

Companies are already deploying private LLMs and reaping the benefits. The ability to create bespoke AI solutions has helped them stay ahead in a fiercely competitive market. Of course, most companies doing so are keeping details close to their chest. But we’re seeing it firsthand at Cprime and across our community of support and development partners:

- Atlassian has integrated Atlassian Intelligence into a number of its Cloud products, offering real-time virtual assistance that securely draws on public and private data and knowledge bases to help internal and external customers alike.

- GitLab Duo applies the power of GenAI to support development, security, and operations teams with AI-assisted workflows covering everything from planning and code creation to testing, security, and monitoring.

- ServiceNow has released the Now Intelligence platform, which incorporates machine learning, natural language processing, search, data mining, and analytics to empower customer service representatives and internal support teams and to enable robust customer self-service.

And these are just a few examples of a skyrocketing trend.

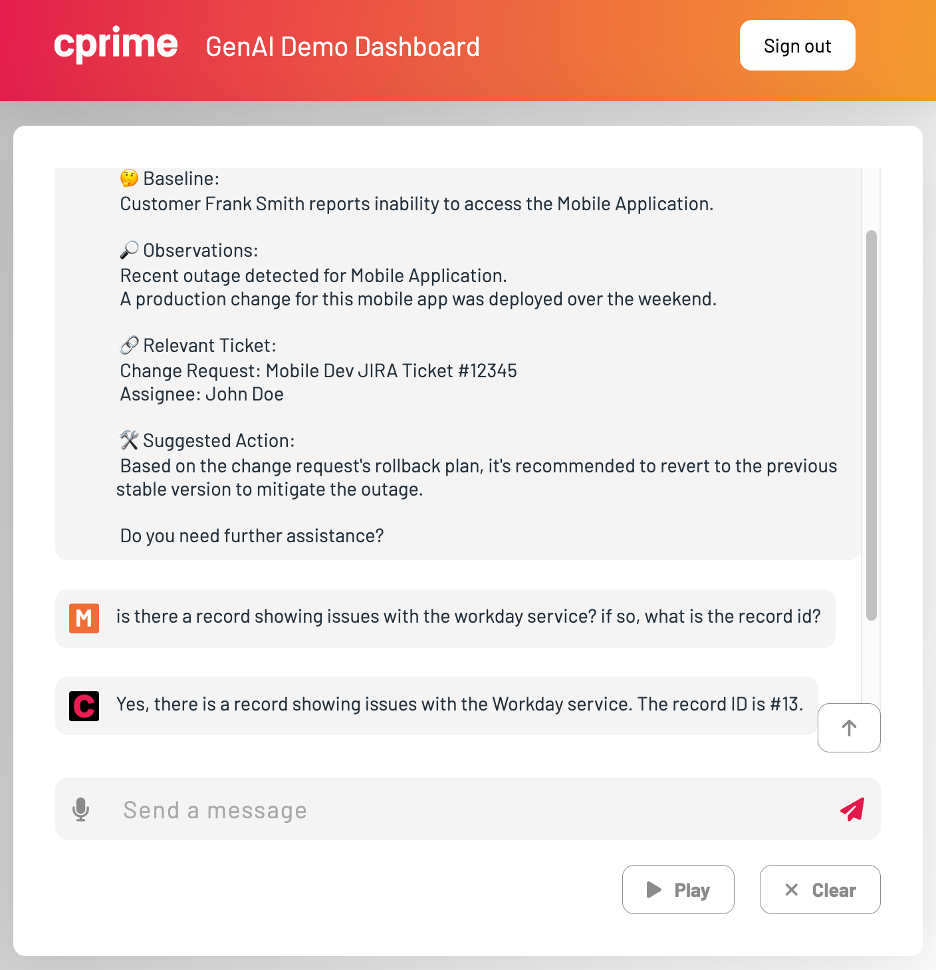

In fact, Cprime is also a leader in the bespoke AI space: we have developed our own private LLM framework in-house, optimized for rapid deployment. Our “PrimeAI” system can help organizations quickly stand up a proof of concept (PoC) with a private, customized LLM at surprisingly low cost, so they can experiment with the technology while evaluating heavier-weight products from our partners.

The PrimeAI solution helps connect an entirely private world-class LLM to your own internal data sets, enabling you to cost-effectively explore a wide range of use cases while deciding how to proceed in the long term.

Harnessing the unseen potential

The journey towards developing a private LLM is not without challenges, but the payoff could be monumental. With the right resources and a keen eye on the evolving AI landscape, organizations can unlock a future where AI is not just an aid but a critical business ally.

Ready to dive into the world of private LLMs and chatbots? It’s an exciting yet demanding venture that promises a competitive edge in a fast-evolving tech landscape. Leading companies are already investing heavily in these technologies, recognizing the advantages they bring to the table. It’s high time your organization did too, embracing the AI-driven future with open arms.