From Monolith to Microservices and Beyond

Monolithic architectures were long the de facto standard for building internet applications. Although monoliths are still in use today, microservices have grown in popularity and are becoming the established architecture for building services. Service-Oriented Architectures (SOA) are not new, but their specialization into microservices (small, loosely coupled, independently deployable services that each focus on a single, well-defined business case) became wildly popular because it enables:

- Faster delivery

- Isolation

- Scaling

- Culture

- Flexibility

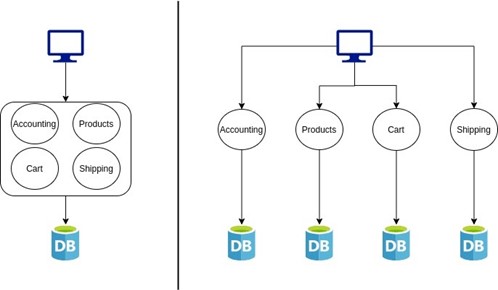

Distributed systems are complex. The above (simplified) diagram depicts how a monolith can be broken down into microservices but hides a lot of complexity, such as:

- Service discovery

- Load balancing

- Fault tolerance

- Distributed tracing

- Metrics

- Security

As systems grow, these challenges intensify to the point where teams focused on specific business cases can hardly tackle them on their own.

With the advent and popularization of containers, technologies emerged to tame the ever-growing operations demand, offering rich sets of features. Enter Kubernetes, the most popular container platform today, supported by every major cloud provider, offering:

- Automated rollouts and rollbacks

- Storage orchestration

- Automatic bin packing

- Self-healing

- Service discovery and load balancing

- Secret and configuration management

- Batch execution

- Horizontal scaling

Orchestration platforms like Kubernetes aim to ease the operational burden on teams while bringing new development patterns to the mix. Even so, development teams still have to put some effort into operating their services. Platforms like Kubernetes have their own “language” that must be understood before applications can be deployed on them, along with a diverse set of configuration features and requirements. For example, for Kubernetes to handle the service lifecycle optimally, services should expose health endpoints that Kubernetes probes to decide when to restart a service.
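
As a rough illustration of such health endpoints, here is a minimal sketch using only the Python standard library. The paths `/healthz` and `/readyz`, the port `8080`, and the `ready` flag are assumptions made for this example; Kubernetes probes whatever path and port the Pod specification declares.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def probe_status(path: str, ready: bool) -> int:
    """Map a probe path to the HTTP status code the service should return."""
    if path == "/healthz":
        # Liveness: the process is up and able to answer requests.
        return 200
    if path == "/readyz":
        # Readiness: report 503 until the service can accept traffic.
        return 200 if ready else 503
    return 404


class HealthHandler(BaseHTTPRequestHandler):
    # Hypothetical flag; a real service would check its own dependencies here.
    ready = True

    def do_GET(self):
        self.send_response(probe_status(self.path, self.ready))
        self.end_headers()


def make_server(port: int = 8080) -> HTTPServer:
    """Build (but do not start) an HTTP server exposing the probe endpoints."""
    return HTTPServer(("0.0.0.0", port), HealthHandler)
```

Calling `make_server().serve_forever()` would start the server. A liveness probe pointed at `/healthz` lets Kubernetes restart a container that stops answering, while a readiness probe pointed at `/readyz` keeps traffic away from a Pod that is not ready yet.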

Although Kubernetes was developed to orchestrate containers at large, it does not manage containers directly. Instead, it manages Pods, which are groups of containers that share storage and network resources and have the same lifecycle. Kubernetes guarantees that all containers inside a Pod are co-located and co-scheduled and that they all run in a shared context.

These shared facilities between containers facilitate the adoption of patterns for composite containers:

- Sidecar – extend and enhance the main container, making it better (e.g. filesystem sync)

- Ambassador – proxy connections to and from the outside world (e.g. HTTP requests)

- Adapter – standardize and normalize output from sources (e.g. log data for centralized logging)
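
To make the Adapter pattern from the list above more concrete, the sketch below normalizes log lines from a main container into a common JSON shape that a central log collector could consume. The input line format, the field names, and the `adapt` function are all hypothetical and chosen only for illustration.

```python
import json
import re

# Hypothetical format emitted by the main container:
#   "2024-01-01 12:00:00 [WARN] disk almost full"
LEGACY_LINE = re.compile(r"^(?P<ts>\S+ \S+) \[(?P<level>\w+)\] (?P<msg>.*)$")


def adapt(line: str) -> str:
    """Convert one legacy log line into a normalized JSON record."""
    text = line.strip()
    match = LEGACY_LINE.match(text)
    if match is None:
        # Unknown format: keep the raw text instead of dropping the line.
        record = {"timestamp": None, "level": "unknown", "message": text}
    else:
        record = {
            "timestamp": match["ts"],
            "level": match["level"].lower(),
            "message": match["msg"],
        }
    return json.dumps(record, sort_keys=True)
```

An adapter container running this logic next to the main container would read its log output (for example from a shared volume) and emit the normalized records, so the main container never needs to know which format the logging backend expects.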

By going deeper into microservices architectures, with the ultimate goal of each microservice focusing only on its own business logic, we can hypothesize that some functionalities could be abstracted and wrapped around the business logic using the above composite patterns. In general, services require:

- Lifecycle management – deployments, rollbacks, configuration management, (auto)scaling

- Networking – service discovery, retries, timeouts, circuit breaking, dynamic routing, observability

- Resource binding – message transformation, protocol conversion, connectors

- Stateful Abstractions – application state, workflow management, distributed caching

By abstracting these functionalities to companion containers, organizations can onboard battle-tested industry solutions while their own microservices focus on differentiating business features. And there are already several projects exploring these new territories.

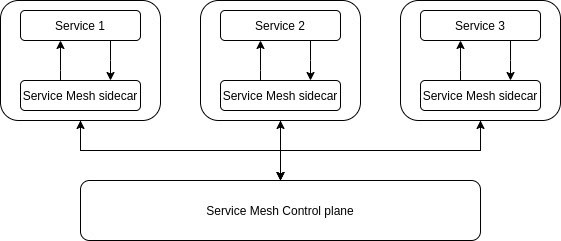

Service Mesh

A Service Mesh is a dedicated and configurable infrastructure layer that handles network-based communication between services. Istio and Linkerd are two examples of implementations. Most implementations have two main components: the Control plane and the Data plane. The Control plane manages and configures the proxies that compose the Data plane. Those Data plane proxies are deployed as sidecars and can provide functionalities like service discovery, retries, timeouts, circuit breaking, fault injection, and much more.

By using a Service Mesh, services can offload these concerns and focus on business rules. And since microservices can be developed using different languages and frameworks, moving these functionalities into the mesh means they do not have to be redeveloped and maintained for each language or framework.
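
To give a sense of what a service would otherwise have to implement itself, here is a minimal circuit breaker sketch. It is not how Istio or Linkerd implement the feature; the class name, thresholds, and injectable clock are assumptions made for this example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock                # injectable so the logic is testable
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Fail fast instead of hitting a dependency known to be down.
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: fall through and allow one trial call.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Wrapping every outbound call as `breaker.call(fetch_orders)` (with `fetch_orders` being any hypothetical client function) is exactly the kind of per-language plumbing a sidecar proxy takes over, configured once at the mesh level rather than coded into each service.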

Serverless Computing

Serverless computing comes into play with the promise of freeing teams from having to deal with operational tasks. The general idea with Serverless computing is to be able to provide the service code, together with some minimal configuration, and the provider will take care of the operational aspects. Most cloud providers have serverless offerings and there are also serverless options on top of Kubernetes that use some of the patterns mentioned before. Some examples are Knative, Kubeless, or OpenFaaS.

Distributed Application Runtimes

Projects like Dapr aim to be the Holy Grail for application development. Their goal is to help developers build resilient services that run in the cloud. By codifying best practices for building microservices into independent and agnostic building blocks that can be used only if necessary, they allow services to be built using any language or framework and run anywhere.

They offer capabilities around networking (e.g. service discovery, retries), observability (e.g. tracing) as well as capabilities around resource binding like connectors to cloud APIs and publish/subscribe systems. Those functionalities can be provided to services via libraries or deployed using sidecars.
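
As a sketch of the sidecar model, the snippet below builds requests against Dapr's HTTP API for saving state and publishing an event. It assumes a Dapr sidecar listening on its default HTTP port 3500; the component names (`statestore`, `pubsub`), topic, and payloads used in the usage note are hypothetical and would have to match components configured in a real deployment.

```python
import json
import urllib.request

# Assumption: the Dapr sidecar is reachable on localhost at its default HTTP port.
DAPR_BASE = "http://localhost:3500/v1.0"


def _json_post(url: str, payload) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request."""
    return urllib.request.Request(
        url=url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def save_state_request(store: str, key: str, value) -> urllib.request.Request:
    """Request that asks the sidecar to save one key/value pair in a state store."""
    return _json_post(f"{DAPR_BASE}/state/{store}", [{"key": key, "value": value}])


def publish_request(pubsub: str, topic: str, event) -> urllib.request.Request:
    """Request that asks the sidecar to publish an event to a topic."""
    return _json_post(f"{DAPR_BASE}/publish/{pubsub}/{topic}", event)
```

With a sidecar running, `urllib.request.urlopen(save_state_request("statestore", "order-1", {"total": 42}))` would send the request. The service only speaks HTTP to localhost; which database or message broker sits behind the component is a configuration concern of the sidecar.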

Microservices and Beyond

Microservices are entering an era of multi-runtime, where interactions between the business logic and the outside world are done through sidecars. Those sidecars offer abstractions around networking, lifecycle management, resource binding, and state. We get the benefits of microservices with bounded contexts handling their own piece of the puzzle.

Microservices will focus more and more on differentiating business logic, taking advantage of battle-tested, off-the-shelf sidecars that can be configured with a bit of YAML or JSON and updated easily since they’re not part of the service itself. Together they will compose the intricate web of services that will power the future.