From Guesswork to ROI: The Critical Role of Metrics in AI-Driven Development

Companies across the globe are eagerly experimenting with various AI solutions. Pilots abound, some of them costing millions. Enthusiasm for this shiny new tech is at an all-time high. But there’s a problem: who’s measuring the actual return on investment (ROI) from these AI initiatives? Even after lengthy pilot programs with AI tools like GitHub Copilot, many companies are considering expensive rollouts based, essentially, on hype and their teams’ gut feelings.

For savvy executives, that just won’t do.

This leap into AI, which reminds me of the early days of Agile adoption, raises the question: how can businesses assess the value of their AI investments without effective measurement?

The Importance of Metrics in Agile and AI

Without concrete metrics to gauge the improvements and ROI from AI tools, companies are navigating in the dark, making decisions based on hype rather than hard evidence. They’re risking financial resources, and (perhaps more importantly) they could miss out on genuinely transformative opportunities as a result. Without measurement, there is no visibility, and without visibility, there is no way to ensure that investments in AI are sound, strategic, and ultimately successful.

I clearly remember the path many organizations took in past years with Agile methodologies, and today’s rapid push to integrate AI into software development processes is following the same course. Both require a major shift in mindset, a willingness to experiment, and, crucially, a commitment to measurement.

In Agile, metrics like velocity, sprint burndown, and release burnup are great for gauging team performance, project progress, and overall efficiency. You can base decisions on these metrics, adapt strategies, and continuously improve. By the same logic, successfully adopting AI in software development demands that we establish clear, relevant metrics and figure out how to monitor them effectively.

The Challenge of Measuring AI’s Impact

Applied to software development, AI tools can increase productivity, which is relatively easy to measure. But they can also enhance code quality, reduce the incidence of bugs, and facilitate more innovative solutions by freeing developers from repetitive tasks. These indirect benefits are harder to quantify and incorporate into an ROI calculation, even though we intuitively know they’re valuable. So we need to measure not only the immediate impact of AI on development speed and efficiency but also, somehow, its broader contributions to project outcomes and team dynamics.

The Solution: Integrated Measurement with CodeBoost and Allstacks

Organizations need a solution that not only enhances developer productivity but also integrates seamlessly with tools for comprehensive metrics. That combination is the key to navigating the complexities of measuring AI’s impact on software development.

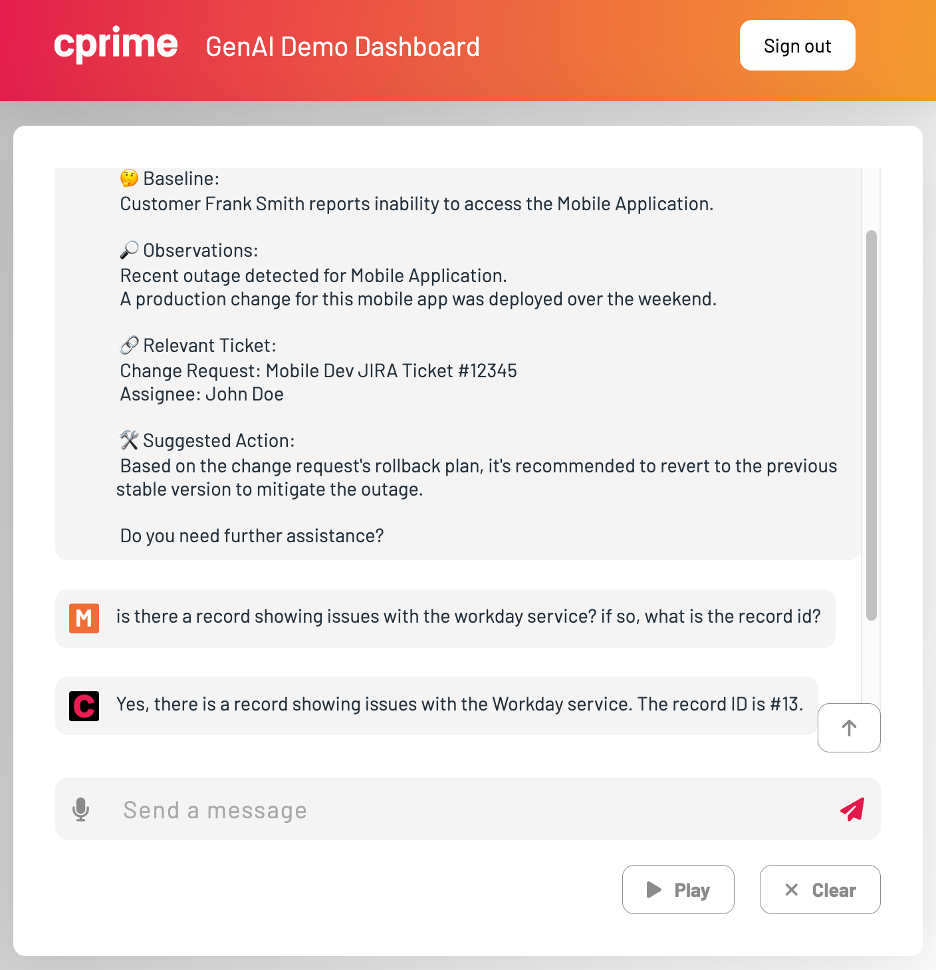

CodeBoost—our holistic framework, powered by CprimeAI—offers precisely this combination, letting you quantify the ROI of AI investments.

The CodeBoost framework does it all:

- Automating repetitive tasks

- Suggesting code improvements

- Facilitating faster debugging and code review processes

- Powering fast and high-quality user story generation

But there’s more. Beyond GitHub Copilot-style coding assistance, CodeBoost comes with industry-leading implementation and enablement services. It empowers development teams, getting them up and running quickly so you can see quantifiable results in as little as ten weeks.

We’re talking about immediate efficiency gains, as you’d expect, but also improved code quality and rising developer satisfaction over time.

But none of that supplies the concrete measurement needed to prove the claims I just made. That’s why the true power of CodeBoost lies in its seamless, baked-in integration with Allstacks. With comprehensive metrics automatically measured and monitored through Allstacks, the sky’s the limit.

Allstacks serves as the analytical backbone. You set a baseline at the start of a CodeBoost implementation, and Allstacks provides ongoing automatic reporting throughout the pilot and beyond. It tracks key performance indicators (KPIs) relevant to software development, such as time saved on coding tasks, reduction in bugs or errors, and improvements in project delivery timelines.

This ability is further enhanced by custom reporting capabilities that tailor metrics to your organization’s specific needs and goals. Whether you care most about adoption rate, faster time to market for new features, reduced technical debt, or something else, Allstacks provides the flexibility to focus on the metrics that matter most.

With this integrated approach to measurement, there’s no question about the value of CodeBoost. Developers appreciate a quality tool that makes their lives easier, while executives have clear, data-driven insights into the ROI of their AI investment.

It’s a win-win scenario.

What’s Your Next Step?

By setting clear metrics from the outset and leveraging ongoing, automatic reporting, you can confidently navigate the complexities of AI adoption, making informed decisions that align with your strategic goals.

We’re excited by the results we’ve already seen just months into the rollout of CodeBoost. If you’d like a custom demo of CodeBoost to see what it can do for you, just respond in the comments or reach out to me personally!